Halting problem

In computability theory, the halting problem can be stated as follows: Given a description of a computer program, decide whether the program finishes running or continues to run forever. This is equivalent to the problem of deciding, given a program and an input, whether the program will eventually halt when run with that input, or will run forever.

Alan Turing proved in 1936 that a general algorithm to solve the halting problem for all possible program-input pairs cannot exist. A key part of the proof was a mathematical definition of a computer and program, which became known as a Turing machine; the halting problem is undecidable over Turing machines. It was one of the first decision problems to be proved undecidable.

Jack Copeland (2004) attributes the actual term halting problem to Martin Davis.[1]

Introduction

The halting problem is a decision problem about properties of computer programs on a fixed Turing-complete model of computation, i.e. all programs that can be written in some given programming language that is general enough to be equivalent to a Turing machine. The problem is to determine, given a program and an input to the program, whether the program will eventually halt when run with that input. In this abstract framework, there are no resource limitations on the amount of memory or time required for the program's execution; it can take arbitrarily long, and use arbitrarily much storage space, before halting. The question is simply whether the given program will ever halt on a particular input.

For example, in pseudocode, the program

- while True: continue

does not halt; rather, it goes on forever in an infinite loop. On the other hand, the program

- print "Hello World!"

halts very quickly.

A more complex program might be harder to analyze. One could run the program for some fixed time, but if it has not halted by then, there is in general no way to know whether it will eventually halt or run forever. Turing proved that no algorithm can be applied to an arbitrary program and input to decide whether the program stops when run with that input.
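The limits of "run it for a while and see" can be illustrated with a minimal sketch. It assumes programs are modelled as Python generators in which each `yield` counts as one step; the names `run_with_budget`, `collatz` and `loop_forever` are hypothetical and chosen only for this example.

```python
from typing import Callable, Generator, Optional

# Assumption: a "program" is a generator function; each yield is one step,
# and returning from the generator means the program has halted.
Program = Callable[[int], Generator[None, None, int]]


def loop_forever(x: int) -> Generator[None, None, int]:
    """Never halts: takes one step after another without end."""
    while True:
        yield


def collatz(x: int) -> Generator[None, None, int]:
    """Halts once the Collatz iteration starting at x reaches 1."""
    steps = 0
    while x != 1:
        x = x // 2 if x % 2 == 0 else 3 * x + 1
        steps += 1
        yield
    return steps


def run_with_budget(program: Program, x: int, budget: int) -> Optional[int]:
    """Return the program's result if it halts within `budget` steps, else None.

    None only means "unknown": the program might halt later or never.
    """
    execution = program(x)
    for _ in range(budget):
        try:
            next(execution)
        except StopIteration as done:
            return done.value
    return None
```

A result of `None` carries no information about what happens after the budget is exhausted, which is exactly the gap that no general algorithm can close.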

Importance and consequences

The halting problem is historically important because it was one of the first problems to be proved undecidable. (Turing's proof went to press in May 1936, whereas Alonzo Church's proof of the undecidability of a problem in the lambda calculus had already been published in April 1936.) Subsequently, many other undecidable problems have been described; the typical method of proving a problem to be undecidable is with the technique of reduction. To do this, it is sufficient to show that if a solution to the new problem were found, it could be used to decide an undecidable problem by transforming instances of the undecidable problem into instances of the new problem. Since we already know that no method can decide the old problem, no method can decide the new problem either. Often the old problem used in such a reduction is the halting problem itself; that is, the halting problem is reduced to the new problem.

For example, one such consequence of the halting problem's undecidability is that there cannot be a general algorithm that decides whether a given statement about natural numbers is true or not. The reason for this is that the proposition stating that a certain algorithm will halt given a certain input can be converted into an equivalent statement about natural numbers. If we had an algorithm that could solve every statement about natural numbers, it could certainly solve this one; but that would determine whether the original program halts, which is impossible, since the halting problem is undecidable.
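The reduction technique described above can be sketched in code. The "new problem" here is an assumption made up for illustration: deciding whether a given program returns 0 on input 0. The function names and the parameter `returns_zero_on_zero` (a hypothetical decider for the new problem) are likewise not from any source.

```python
from typing import Callable


def reduce_halting_instance(
    program: Callable[[int], object], x: int
) -> Callable[[int], int]:
    """Turn the halting question for (program, x) into one new program."""

    def wrapper(_unused: int) -> int:
        program(x)  # never returns if program does not halt on x
        return 0    # reached exactly when program halts on x

    return wrapper


def halts(
    program: Callable[[int], object],
    x: int,
    returns_zero_on_zero: Callable[[Callable[[int], int]], bool],
) -> bool:
    """Decide halting, given a (hypothetical) decider for the new problem."""
    return returns_zero_on_zero(reduce_halting_instance(program, x))
```

If a total, always-correct `returns_zero_on_zero` existed, `halts` would decide the halting problem; since that is impossible, no such decider can exist. Only the transformation itself is real code here.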

Rice's theorem generalizes the theorem that the halting problem is unsolvable. It states that any non-trivial property of the partial function that is implemented by a program is undecidable. (A partial function is a function which may not always produce a result, and so is used to model programs, which can either produce results or fail to halt.) For example, the property "halt for the input 0" is undecidable. Note that this theorem holds only for properties of the partial function implemented by the program; Rice's Theorem does not apply to properties of the program itself. For example, "halt on input 0 within 100 steps" is not a property of the partial function that is implemented by the program—it is a property of the program implementing the partial function and is very much decidable.

Gregory Chaitin has defined a halting probability, represented by the symbol Ω, a type of real number that informally is said to represent the probability that a randomly produced program halts. These numbers have the same Turing degree as the halting problem. Each is a normal and transcendental number which can be defined but cannot be completely computed. This means one can prove that there is no algorithm which produces the digits of Ω, although its first few digits can be calculated in simple cases.

While Turing's proof shows that there can be no general method or algorithm to determine whether algorithms halt, individual instances of that problem may very well be susceptible to attack. Given a specific algorithm, one can often show that it must halt for any input, and in fact computer scientists often do just that as part of a correctness proof. But each proof has to be developed specifically for the algorithm at hand; there is no mechanical, general way to determine whether algorithms on a Turing machine halt. However, there are some heuristics that can be used in an automated fashion to attempt to construct a proof, which succeed frequently on typical programs. This field of research is known as automated termination analysis.

Since the negative answer to the halting problem shows that there are problems that cannot be solved by a Turing machine, the Church–Turing thesis limits what can be accomplished by any machine that implements effective methods. However, not all machines conceivable to human imagination are subject to the Church–Turing thesis (e.g. oracle machines are not). It is an open question whether there can be actual deterministic physical processes that, in the long run, elude simulation by a Turing machine, and in particular whether any such hypothetical process could usefully be harnessed in the form of a calculating machine (a hypercomputer) that could solve the halting problem for a Turing machine amongst other things. It is also an open question whether any such unknown physical processes are involved in the working of the human brain, and whether humans can solve the halting problem.[2]

Representing the halting problem as a set

The conventional representation of decision problems is the set of objects possessing the property in question. The halting set

- K := { (i, x) | program i will eventually halt if run with input x}

represents the halting problem.

This set is recursively enumerable, which means there is a computable function that lists all of the pairs (i, x) it contains. However, the complement of this set is not recursively enumerable.
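Recursive enumerability of the halting set can be illustrated by dovetailing: running every program on every input with a growing step budget and reporting each pair as soon as it is seen to halt. The sketch below is an illustration under stated assumptions: programs are modelled as Python generators (one `yield` per step), and the enumeration of all programs is replaced by a short hypothetical list `PROGRAMS` so that the code can actually run.

```python
from itertools import count, islice
from typing import Callable, Generator, Iterator, List, Set, Tuple

Program = Callable[[int], Generator[None, None, None]]


def halts_on_even(x: int) -> Generator[None, None, None]:
    while x % 2 != 0:  # loops forever on odd inputs
        yield


def counts_down(x: int) -> Generator[None, None, None]:
    while x > 0:       # halts on every input
        x -= 1
        yield


def never_halts(x: int) -> Generator[None, None, None]:
    while True:
        yield


# Assumption: a finite stand-in for "the i-th program" of a full enumeration.
PROGRAMS: List[Program] = [halts_on_even, counts_down, never_halts]


def halts_within(program: Program, x: int, budget: int) -> bool:
    """True if program(x) halts within `budget` steps."""
    execution = program(x)
    for _ in range(budget):
        try:
            next(execution)
        except StopIteration:
            return True
    return False


def enumerate_halting_pairs(programs: List[Program]) -> Iterator[Tuple[int, int]]:
    """Yield every pair (i, x) such that program i halts on input x."""
    seen: Set[Tuple[int, int]] = set()
    for stage in count(1):  # stage s: inputs below s, budget of s steps
        for i, program in enumerate(programs):
            for x in range(stage):
                if (i, x) not in seen and halts_within(program, x, stage):
                    seen.add((i, x))
                    yield (i, x)


first_pairs = list(islice(enumerate_halting_pairs(PROGRAMS), 6))
```

Every halting pair appears eventually, but a pair that never appears cannot be distinguished at any finite stage from one that has not appeared yet, which is why the same procedure does not enumerate the complement.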

There are many equivalent formulations of the halting problem; any set whose Turing degree equals that of the halting problem is such a formulation. Examples of such sets include:

- { i | program i eventually halts when run with input 0 }

- { i | there is an input x such that program i eventually halts when run with input x }.

Sketch of proof

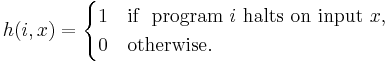

The proof[3] shows there is no total computable function that decides whether an arbitrary program i halts on arbitrary input x; that is, the following function h is not computable:

Here program i refers to the i th program in an enumeration of all the programs of a fixed Turing-complete model of computation.

| f(i,j) | i = 1 | i = 2 | i = 3 | i = 4 | i = 5 | i = 6 |
|--------|-------|-------|-------|-------|-------|-------|
| j = 1 | 1 | 0 | 0 | 1 | 0 | 1 |
| j = 2 | 0 | 0 | 0 | 1 | 0 | 0 |
| j = 3 | 0 | 1 | 0 | 1 | 0 | 1 |
| j = 4 | 1 | 0 | 0 | 1 | 0 | 0 |
| j = 5 | 0 | 0 | 0 | 1 | 1 | 1 |
| j = 6 | 1 | 1 | 0 | 0 | 1 | 0 |
| f(i,i) | 1 | 0 | 0 | 1 | 1 | 0 |
| g(i) | U | 0 | 0 | U | U | 0 |

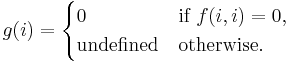

The proof proceeds by directly establishing that every total computable function with two arguments differs from the required function h. To this end, given any total computable binary function f, the following partial function g is also computable by some program e:

The verification that g is computable relies on the following constructs (or their equivalents):

- computable subprograms (the program that computes f is a subprogram in program e),

- duplication of values (program e computes the inputs i,i for f from the input i for g),

- conditional branching (program e selects between two results depending on the value it computes for f(i,i)),

- not producing a defined result (for example, by looping forever),

- returning a value of 0.

The following pseudocode illustrates a straightforward way to compute g:

    procedure compute_g(i):
        if f(i,i) == 0 then
            return 0
        else
            loop forever

Because g is partial computable, there must be a program e that computes g, by the assumption that the model of computation is Turing-complete. This program is one of all the programs on which the halting function h is defined. The next step of the proof shows that h(e,e) will not have the same value as f(e,e).

It follows from the definition of g that exactly one of the following two cases must hold:

- g(e) = f(e,e) = 0. In this case h(e,e) = 1, because program e halts on input e.

- g(e) is undefined and f(e,e) ≠ 0. In this case h(e,e) = 0, because program e does not halt on input e.

In either case, f cannot be the same function as h. Because f was an arbitrary total computable function with two arguments, all such functions must differ from h.

This proof is analogous to Cantor's diagonal argument. One may visualize a two-dimensional array with one column and one row for each natural number, as indicated in the table above. The value of f(i,j) is placed at column i, row j. Because f is assumed to be a total computable function, any element of the array can be calculated using f. The construction of the function g can be visualized using the main diagonal of this array. If the array has a 0 at position (i,i), then g(i) is 0. Otherwise, g(i) is undefined. The contradiction comes from the fact that there is some column e of the array corresponding to g itself. If f were the halting function h, there would be a 1 at position (e,e) if and only if g(e) is defined. But g is constructed so that g(e) is defined if and only if there is a 0 in position (e,e).
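The diagonal construction can be checked on the finite sample of values shown in the table of the proof sketch. This is only an illustration: `TABLE` copies those six rows, and `None` is used as a stand-in for "undefined" (the real g would loop forever instead of returning).

```python
from typing import List, Optional

# Rows are j = 1..6 and columns are i = 1..6, copied from the table.
TABLE: List[List[int]] = [
    [1, 0, 0, 1, 0, 1],
    [0, 0, 0, 1, 0, 0],
    [0, 1, 0, 1, 0, 1],
    [1, 0, 0, 1, 0, 0],
    [0, 0, 0, 1, 1, 1],
    [1, 1, 0, 0, 1, 0],
]


def f(i: int, j: int) -> int:
    """The candidate total function: value at column i, row j (1-based)."""
    return TABLE[j - 1][i - 1]


def g(i: int) -> Optional[int]:
    """0 where the diagonal entry is 0; None stands in for 'undefined'."""
    return 0 if f(i, i) == 0 else None


diagonal = [f(i, i) for i in range(1, 7)]
g_values = [g(i) for i in range(1, 7)]

# f(i, i) == 1 would claim "halts", yet g is defined exactly where f(i, i) == 0.
disagrees = [(f(i, i) == 1) != (g(i) is not None) for i in range(1, 7)]
```

Every entry of `disagrees` is true, so whichever column e corresponds to g, the value f(e,e) misreports whether g halts on e.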

Common pitfalls

The difficulty in the halting problem lies in the requirement that the decision procedure must work for all programs and inputs. A particular program either halts on a given input or does not halt. Consider one algorithm that always answers "halts" and another that always answers "doesn't halt." For any specific program and input, one of these two algorithms answers correctly, even though nobody may know which one.

There are programs (interpreters) that simulate the execution of whatever source code they are given. Such programs can demonstrate that a program does halt if this is the case: the interpreter itself will eventually halt its simulation, which shows that the original program halted. However, an interpreter will not halt if its input program does not halt, so this approach cannot solve the halting problem as stated. It does not successfully answer "doesn't halt" for programs that do not halt.

The halting problem is theoretically decidable for linear bounded automata (LBAs) or deterministic machines with finite memory. A machine with finite memory has a finite number of states, and thus any deterministic program on it must eventually either halt or repeat a previous state:

- "...any finite-state machine, if left completely to itself, will fall eventually into a perfectly periodic repetitive pattern. The duration of this repeating pattern cannot exceed the number of internal states of the machine..."(italics in original, Minsky 1967, p. 24)

Minsky warns us, however, that machines such as computers with e.g. a million small parts, each with two states, will have on the order of 2^1,000,000 possible states:

- "This is a 1 followed by about three hundred thousand zeroes ... Even if such a machine were to operate at the frequencies of cosmic rays, the aeons of galactic evolution would be as nothing compared to the time of a journey through such a cycle" (Minsky p. 25)

Minsky exhorts the reader to be suspicious—although a machine may be finite, and finite automata "have a number of theoretical limitations":

- "...the magnitudes involved should lead one to suspect that theorems and arguments based chiefly on the mere finiteness [of] the state diagram may not carry a great deal of significance" (ibid).

For more on this issue of "intractability" see Busy beaver.

It can also be decided automatically whether a nondeterministic machine with finite memory halts on none of, some of, or all of the possible sequences of nondeterministic decisions, by enumerating states after each possible decision.
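For the deterministic finite-memory case described above, the decision procedure amounts to simulating the machine and watching for a repeated state. The following is a minimal sketch; the interface (a `step` function that returns the next state, or `None` on halting) and the two example machines are assumptions made for illustration.

```python
from typing import Callable, Hashable, Optional, Set


def halts_finite(
    step: Callable[[Hashable], Optional[Hashable]], start: Hashable
) -> bool:
    """Decide halting for a deterministic machine with finitely many states.

    `step` maps a state to its successor, or to None when the machine halts.
    """
    seen: Set[Hashable] = set()
    state: Optional[Hashable] = start
    while state is not None:
        if state in seen:
            return False  # a repeated state means the run is periodic
        seen.add(state)
        state = step(state)
    return True


def countdown(state: int) -> Optional[int]:
    """Hypothetical machine: counts down and halts at zero."""
    return None if state == 0 else state - 1


def spin(state: int) -> Optional[int]:
    """Hypothetical 8-bit machine: cycles through its states without halting."""
    return (state + 2) % 256
```

The loop runs for at most as many iterations as there are states, which is the source of Minsky's warning: the bound is finite but can be astronomically large.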

Formalization of the halting problem

In his original proof Turing formalized the concept of algorithm by introducing Turing machines. However, the result is in no way specific to them; it applies equally to any other model of computation that is equivalent in its computational power to Turing machines, such as Markov algorithms, Lambda calculus, Post systems, register machines, or tag systems.

What is important is that the formalization allows a straightforward mapping of algorithms to some data type that the algorithm can operate upon. For example, if the formalism lets algorithms define functions over strings (such as Turing machines) then there should be a mapping of these algorithms to strings, and if the formalism lets algorithms define functions over natural numbers (such as computable functions) then there should be a mapping of algorithms to natural numbers. The mapping to strings is usually the most straightforward, but strings over an alphabet with n characters can also be mapped to numbers by interpreting them as numbers in an n-ary numeral system.
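The mapping between strings and natural numbers can be sketched as follows. Note the swapped-in technique: this sketch uses bijective base-n numeration (digits 1..n rather than 0..n-1), an assumption chosen so that distinct strings always receive distinct numbers; plain base-n positional notation would conflate strings that differ only in leading zero-digits. The function names are hypothetical.

```python
def string_to_number(s: str, alphabet: str) -> int:
    """Encode s as a natural number using bijective base-n digits 1..n."""
    n = len(alphabet)
    value = 0
    for ch in s:
        value = value * n + alphabet.index(ch) + 1
    return value


def number_to_string(value: int, alphabet: str) -> str:
    """Inverse of string_to_number for the same alphabet."""
    n = len(alphabet)
    chars = []
    while value > 0:
        value, digit = divmod(value - 1, n)
        chars.append(alphabet[digit])
    return "".join(reversed(chars))
```

With the two-letter alphabet `"ab"`, the empty string maps to 0 and the strings are numbered in order of length and then alphabetically, so every natural number corresponds to exactly one string.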

Relationship with Gödel's incompleteness theorem

The concepts raised by Gödel's incompleteness theorems are very similar to those raised by the halting problem, and the proofs are quite similar. In fact, a weaker form of the First Incompleteness Theorem is an easy consequence of the undecidability of the halting problem. This weaker form differs from the standard statement of the incompleteness theorem by asserting that a complete, consistent and sound axiomatization of all statements about natural numbers is unachievable. The "sound" part is the weakening: it means that we require the axiomatic system in question to prove only true statements about natural numbers. By contrast, the standard form of Gödel's First Incompleteness Theorem is unconcerned with the question of truth and concerns only formal provability.

The weaker form of the theorem can be proven from the undecidability of the halting problem as follows. Assume that we have a consistent and complete axiomatization of all true first-order logic statements about natural numbers. Then we can build an algorithm that enumerates all these statements. This means that there is an algorithm N(n) that, given a natural number n, computes a true first-order logic statement about natural numbers such that, for all the true statements, there is at least one n such that N(n) yields that statement. Now suppose we want to decide if the algorithm with representation a halts on input i. By using Kleene's T predicate, we can express the statement "a halts on input i" as a statement H(a, i) in the language of arithmetic. Since the axiomatization is complete it follows that either there is an n such that N(n) = H(a, i) or there is an n' such that N(n') = ¬ H(a, i). So if we iterate over all n until we either find H(a, i) or its negation, we will always halt. This means that this gives us an algorithm to decide the halting problem. Since we know that there cannot be such an algorithm, it follows that the assumption that there is a consistent and complete axiomatization of all true first-order logic statements about natural numbers must be false.
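The search step of this argument can be sketched as a loop over the enumeration N. Everything concrete below is an assumption for illustration: statements are represented as plain strings, negation is written as a `"not "` prefix, and `toy_N` is a toy enumerator for a trivially complete family of statements, not an axiomatization of arithmetic.

```python
from itertools import count
from typing import Callable


def decide_by_search(N: Callable[[int], str], statement: str) -> bool:
    """Search N(0), N(1), ... until `statement` or its negation appears.

    Terminates whenever the enumeration is complete for `statement`.
    """
    negation = "not " + statement
    for n in count():
        theorem = N(n)
        if theorem == statement:
            return True
        if theorem == negation:
            return False


def toy_N(n: int) -> str:
    """Toy enumerator: for each n, the true one of 'n is even' / 'not n is even'."""
    return f"{n} is even" if n % 2 == 0 else f"not {n} is even"
```

In the argument above, `statement` would be H(a, i) and N would enumerate the theorems of the assumed complete axiomatization; the loop would then decide halting, which is the contradiction.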

Recognizing partial solutions

There are many programs that either return a correct answer to the halting problem or do not return an answer at all. If it were possible to decide whether any given program gives only correct answers, one might hope to collect a large number of such programs and run them in parallel, in the hope of being able to determine whether any programs halt. Curiously, recognizing such partial halting solvers (PHS) is just as hard as the halting problem itself.

Suppose someone claims that program PHSR is a partial halting solver recognizer. Construct a program H:

    input a program P
    X := "input Q. if Q = P output 'halts' else loop forever"
    run PHSR with X as input

If PHSR recognizes the constructed program X as a partial halting solver, that means that P, the only input for which X produces a result, halts. If PHSR fails to recognize X, then it must be because P does not halt. Therefore H can decide whether an arbitrary program P halts; it solves the halting problem. Since this is impossible, the program PHSR could not have been a partial halting solver recognizer as claimed. Therefore, no program can be a complete partial halting solver recognizer.

Another example, HT, of a Turing machine which gives correct answers only for some instances of the halting problem can be described by the requirements that, if HT is started scanning a field which carries the first of a finite string of a consecutive "1"s, followed by one field with symbol "0" (i. e. a blank field), and followed in turn by a finite string of i consecutive "1"s, on an otherwise blank tape, then

- HT halts for any such starting state, i. e. for any input of finite positive integers a and i;

- HT halts on a completely blank tape if and only if the Turing machine represented by a does not halt when given the starting state and input represented by i; and

- HT halts on a nonblank tape, scanning an appropriate field (which however does not necessarily carry the symbol "1") if and only if the Turing machine represented by a does halt when given the starting state and input represented by i. In this case, the final state in which HT halted (contents of the tape, and field being scanned) shall be equal to some particular intermediate state which the Turing machine represented by a attains when given the starting state and input represented by i; or, if all those intermediate states (including the starting state represented by i) leave the tape blank, then the final state in which HT halted shall be scanning a "1" on an otherwise blank tape.

While its existence has not been refuted (essentially because there is no Turing machine which would halt only if started on a blank tape), such a Turing machine HT would likewise solve the halting problem only partially: if the Turing machine represented by a does halt when given the starting state and input represented by i, HT does not necessarily scan the symbol "1" in its final state, as explicit statements of the halting problem for Turing machines may require.

History of the halting problem

- 1900 – David Hilbert poses his "23 questions" (now known as Hilbert's problems) at the Second International Congress of Mathematicians in Paris. "Of these, the second was that of proving the consistency of the 'Peano axioms' on which, as he had shown, the rigour of mathematics depended" (Hodges p. 83, Davis' commentary in Davis, 1965, p. 108).

- 1920–1921 – Emil Post explores the halting problem for tag systems, regarding it as a candidate for unsolvability. (Source: Absolutely unsolvable problems and relatively undecidable propositions – account of an anticipation, in Davis, 1965, pp. 340–433.) Its unsolvability was not established until much later, by Marvin Minsky [1961].

- 1928 – Hilbert recasts his 'Second Problem' at the Bologna International Congress (cf Reid pp. 188–189). Hodges claims he posed three questions: i.e. #1: Was mathematics complete? #2: Was mathematics consistent? #3: Was mathematics decidable? (Hodges p. 91). The third question is known as the Entscheidungsproblem (Decision Problem) (Hodges p. 91, Penrose p. 34)

- 1930 – Kurt Gödel announces a proof as an answer to the first two of Hilbert's 1928 questions [cf Reid p. 198]. "At first he [Hilbert] was only angry and frustrated, but then he began to try to deal constructively with the problem... Gödel himself felt – and expressed the thought in his paper – that his work did not contradict Hilbert's formalistic point of view" (Reid p. 199).

- 1931 – Gödel publishes "On Formally Undecidable Propositions of Principia Mathematica and Related Systems I" (reprinted in Davis, 1965, p. 5ff)

- 19 April 1935 – Alonzo Church presents "An Unsolvable Problem of Elementary Number Theory" to the American Mathematical Society (the paper appeared in print in April 1936), wherein he identifies what it means for a function to be effectively calculable. Such a function will have an algorithm, and "...the fact that the algorithm has terminated becomes effectively known ..." (italics added, Davis, 1965, p. 100).

- 1936 – Church publishes the first proof that the Entscheidungsproblem is unsolvable [A Note on the Entscheidungsproblem, reprinted in Davis, 1965, p. 110].

- 7 October 1936 – Emil Post's paper "Finite Combinatory Processes. Formulation I" is received. Post adds to his "process" an instruction "(C) Stop". He called such a process "type 1 ... if the process it determines terminates for each specific problem." (Davis, 1965, p. 289ff)

- 1937 – Alan Turing's paper On Computable Numbers With an Application to the Entscheidungsproblem reaches print in January 1937 (reprinted in Davis, 1965, p. 115). Turing's proof departs from calculation by recursive functions and introduces the notion of computation by machine. Stephen Kleene (1952) refers to this as one of the "first examples of decision problems proved unsolvable".

- 1939 – J. Barkley Rosser observes the essential equivalence of "effective method" defined by Gödel, Church, and Turing (Rosser in Davis, 1965, p. 273, "Informal Exposition of Proofs of Gödel's Theorem and Church's Theorem").

- 1943 – In a paper, Stephen Kleene states that "In setting up a complete algorithmic theory, what we do is describe a procedure ... which procedure necessarily terminates and in such manner that from the outcome we can read a definite answer, 'Yes' or 'No,' to the question, 'Is the predicate value true?'."

- 1952 – Kleene (1952) Chapter XIII ("Computable Functions") includes a discussion of the unsolvability of the halting problem for Turing machines and reformulates it in terms of machines that "eventually stop", i.e. halt: "... there is no algorithm for deciding whether any given machine, when started from any given situation, eventually stops." (Kleene (1952) p. 382)

- 1952 – "Davis [ Martin Davis ] thinks it likely that he first used the term 'halting problem' in a series of lectures that he gave at the Control Systems Laboratory at the University of Illinois in 1952 (letter from Davis to Copeland, 12 Dec. 2001.)" (Footnote 61 in Copeland (2004) pp.40ff)

Notes

- ^ In none of his work did Turing use the word "halting" or "termination". Turing's biographer Hodges does not have the word "halting" or words "halting problem" in his index. The earliest known use of the words "halting problem" is in a proof by Davis (p. 70–71, Davis 1958):

- "Theorem 2.2 There exists a Turing machine whose halting problem is recursively unsolvable.

- "A related problem is the printing problem for a simple Turing machine Z with respect to a symbol Si" (p. 70).

- "The halting problem was so named (and it appears, first stated) by Martin Davis [cf Copeland footnote 61]... (It is often said that Turing stated and proved the halting theorem in 'On Computable Numbers', but strictly this is not true)." (p. 40)

- ^ B. Jack Copeland, Computation in Luciano Floridi (ed.), The Blackwell guide to the philosophy of computing and information, Wiley-Blackwell, 2004, ISBN 0-631-22919-1, p. 15

- ^ This proof can be found, for example, in Penrose (1989). The Emperor's New Mind. Oxford UK: Oxford University Press. pp. 57–63. ISBN 0-19-851973-7.

References

- Alan Turing, On computable numbers, with an application to the Entscheidungsproblem, Proceedings of the London Mathematical Society, Series 2, 42 (1936), pp 230–265. online version This is the epochal paper where Turing defines Turing machines, formulates the halting problem, and shows that it (as well as the Entscheidungsproblem) is unsolvable.

- Sipser, Michael (2006). "Section 4.2: The Halting Problem". Introduction to the Theory of Computation (2nd ed.). PWS Publishing. pp. 173–182. ISBN 053494728X.

- Wiki:HaltingProblem

- B. Jack Copeland ed. (2004), The Essential Turing: Seminal Writings in Computing, Logic, Philosophy, Artificial Intelligence, and Artificial Life plus The Secrets of Enigma, Clarendon Press (Oxford University Press), Oxford UK, ISBN 0-19-825079-7.

- Davis, Martin (1965). The Undecidable, Basic Papers on Undecidable Propositions, Unsolvable Problems And Computable Functions. New York: Raven Press. Turing's paper is #3 in this volume. Papers include those by Gödel, Church, Rosser, Kleene, and Post.

- Davis, Martin (1958). Computability and Unsolvability. New York: McGraw-Hill.

- Alfred North Whitehead and Bertrand Russell, Principia Mathematica to *56, Cambridge at the University Press, 1962. Re: the problem of paradoxes, the authors discuss the problem of a set not being an object in any of its "determining functions", in particular Introduction, Chap. 1, p. 24 "...difficulties which arise in formal logic", and Chap. 2.I. "The Vicious-Circle Principle" p. 37ff, and Chap. 2.VIII. "The Contradictions" p. 60ff.

- Martin Davis, "What is a computation", in Mathematics Today, Lynn Arthur Steen, Vintage Books (Random House), 1980. A wonderful little paper, perhaps the best ever written about Turing Machines for the non-specialist. Davis reduces the Turing Machine to a far-simpler model based on Post's model of a computation. Discusses Chaitin proof. Includes little biographies of Emil Post, Julia Robinson.

- Marvin Minsky, Computation, Finite and Infinite Machines, Prentice-Hall, Inc., N.J., 1967. See chapter 8, Section 8.2 "The Unsolvability of the Halting Problem." Excellent, i.e. readable, sometimes fun. A classic.

- Roger Penrose, The Emperor's New Mind: Concerning computers, Minds and the Laws of Physics, Oxford University Press, Oxford England, 1990 (with corrections). Cf: Chapter 2, "Algorithms and Turing Machines". An overly-complicated presentation (see Davis's paper for a better model), but a thorough presentation of Turing machines and the halting problem, and Church's Lambda Calculus.

- John Hopcroft and Jeffrey Ullman, Introduction to Automata Theory, Languages and Computation, Addison-Wesley, Reading Mass, 1979. See Chapter 7 "Turing Machines." A book centered around the machine-interpretation of "languages", NP-Completeness, etc.

- Andrew Hodges, Alan Turing: The Enigma, Simon and Schuster, New York. Cf Chapter "The Spirit of Truth" for a history leading to, and a discussion of, his proof.

- Constance Reid, Hilbert, Copernicus: Springer-Verlag, New York, 1996 (first published 1970). Fascinating history of German mathematics and physics from 1880s through 1930s. Hundreds of names familiar to mathematicians, physicists and engineers appear in its pages. Perhaps marred by no overt references and few footnotes: Reid states her sources were numerous interviews with those who personally knew Hilbert, and Hilbert's letters and papers.

- Edward Beltrami, What is Random? Chance and order in mathematics and life, Copernicus: Springer-Verlag, New York, 1999. Nice, gentle read for the mathematically-inclined non-specialist, puts tougher stuff at the end. Has a Turing-machine model in it. Discusses the Chaitin contributions.

- Ernest Nagel and James R. Newman, Gödel's Proof, New York University Press, 1958. Wonderful writing about a very difficult subject. For the mathematically-inclined non-specialist. Discusses Gentzen's proof on pages 96–97 and footnotes. Appendices discuss the Peano Axioms briefly, gently introduce readers to formal logic.

- Taylor Booth, Sequential Machines and Automata Theory, Wiley, New York, 1967. Cf Chapter 9, Turing Machines. Difficult book, meant for electrical engineers and technical specialists. Discusses recursion, partial-recursion with reference to Turing Machines, halting problem. Has a Turing Machine model in it. References at end of Chapter 9 catch most of the older books (i.e. 1952 until 1967 including authors Martin Davis, F. C. Hennie, H. Hermes, S. C. Kleene, M. Minsky, T. Rado) and various technical papers. See note under Busy-Beaver Programs.

- Busy Beaver Programs are described in Scientific American, August 1984, also March 1985 p. 23. A reference in Booth attributes them to Rado, T.(1962), On non-computable functions, Bell Systems Tech. J. 41. Booth also defines Rado's Busy Beaver Problem in problems 3, 4, 5, 6 of Chapter 9, p. 396.

- David Bolter, Turing’s Man: Western Culture in the Computer Age, The University of North Carolina Press, Chapel Hill, 1984. For the general reader. May be dated. Has yet another (very simple) Turing Machine model in it.

- Stephen Kleene, Introduction to Metamathematics, North-Holland, 1952. Chapter XIII ("Computable Functions") includes a discussion of the unsolvability of the halting problem for Turing machines. In a departure from Turing's terminology of circle-free nonhalting machines, Kleene refers instead to machines that "stop", i.e. halt.

External links

- Scooping the loop snooper - a poetic proof of undecidability of the halting problem